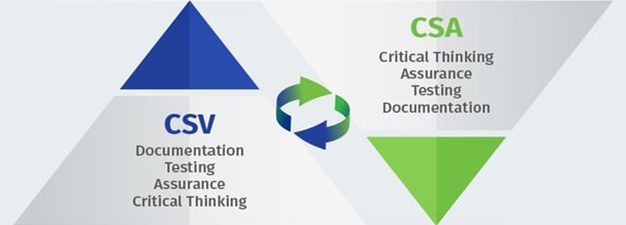

Now that the FDA’s draft guidance on computer software assurance (CSA) is available, life sciences companies that have struggled with traditional computer system validation (CSV) are eager to adopt it, and it’s easy to understand why. The modernized framework is designed to help manufacturers validate systems more efficiently by focusing the right level of testing on higher-risk systems instead of validating (and documenting) everything.

CSV (Test Everything) vs. CSA (Test Better)

Although subtle differences exist between the two methodologies, CSA still promotes the risk-based, least burdensome approach first endorsed in the FDA’s 2002 guidance General Principles of Software Validation. Unfortunately, many life sciences companies struggled (and still struggle) with interpreting the 2002 guidance. This lack of understanding, coupled with the fear of regulatory sanctions, has led to an unfortunate but unsurprising practice that persists to this day: overtesting and exhaustively documenting every step of the testing process “just to be sure.”

Maximizing testing effort regardless of risk strains resources and can even compromise patient safety: when testers try to test everything, they often neglect or entirely miss high-risk issues. CSA instead encourages a least burdensome approach that uses less formal (and far less time-consuming) unscripted testing methods and automated validation planning tools.[1]

Therac-25: Adding Context to the CSA Guidance

In the mid-1980s, the Therac-25, a computer-controlled radiation therapy machine produced by Atomic Energy of Canada Limited, was involved in a series of fatal overdose incidents. At the time, the life sciences industry did not have a clear understanding of electronic records and signatures, which was the primary reason the FDA introduced 21 CFR Part 11 in 1997. Even then, we didn’t clearly understand how to validate. We knew that systems had to be validated to demonstrate that they performed as intended, but we had to establish a process for complying with Part 11. That’s also what drove the evolution of GAMP and other standards.

Long before Part 11, the U.S. military applied verification and validation to its missile guidance systems in the 1960s and 1970s. Believe it or not, we still perform validation based on practices identified by the Department of Defense. So, when 21 CFR Part 11 was released, we automatically defaulted to the highest level of rigor to avoid FDA Form 483 observations and warning letters. But is it really necessary to validate a learning management system with a degree of rigor that originated with missile guidance systems? That default approach has not changed, until now.

Even though CSA is a Case for Quality initiative overseen by the FDA’s Center for Devices and Radiological Health (CDRH), it’s not just for medical devices; it has potential applications across the life sciences.

Test Methodologies Commonly Used in CSA

Scripted testing requires significant preparation and follows a prescribed step-by-step method with expected results and pass/fail outcomes. It is based on preapproved protocols (installation, operational, and performance qualification, or IQ, OQ, and PQ) and is the traditional testing method associated with the CSV methodology. CSA advises us to reserve scripted testing for high-risk features and systems.

Test scripts are developed by qualified individuals, such as validation engineers, who have the necessary education, experience, and training. However, they may not be the system’s end users. They perform a quality control (QC) function, overseen by quality assurance (QA), on a system they may not fully understand, especially if the solution is new. Successful test script development therefore requires training on the system (or the equipment, instrument, method, or process). The objective is to prove that the system consistently performs as intended and can identify invalid or altered records.

With scripted testing, the test script must be authored, optionally reviewed, and then approved (typically with a QA signature) before the protocol is executed. During execution, the protocol must be followed precisely, and any deviation must be recorded and processed according to the effective formal procedure.
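The preapproval gate and deviation handling described above can be sketched in Python. This is a minimal illustration under stated assumptions: the names `TestStep`, `ScriptedProtocol`, `approve`, and `execute` are hypothetical, approval is reduced to a name rather than a real electronic signature, and any step whose actual result differs from its expected result is simply logged as a deviation.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class TestStep:
    instruction: str            # what the tester does
    expected: str               # prescribed expected result
    action: Callable[[], str]   # hypothetical hook that performs the step and returns the observed result


@dataclass
class ScriptedProtocol:
    title: str
    steps: List[TestStep]
    reviewed_by: Optional[str] = None   # review is optional
    approved_by: Optional[str] = None   # e.g., a QA signatory
    deviations: List[str] = field(default_factory=list)

    def review(self, reviewer: str) -> None:
        self.reviewed_by = reviewer

    def approve(self, approver: str) -> None:
        self.approved_by = approver

    def execute(self) -> List[bool]:
        """Run every step in order and return its pass/fail outcome."""
        # Preapproval gate: the protocol cannot be executed until it is approved.
        if self.approved_by is None:
            raise PermissionError(f"'{self.title}' has not been approved")
        outcomes = []
        for number, step in enumerate(self.steps, start=1):
            actual = step.action()
            passed = actual == step.expected
            if not passed:
                # Assumption: every failed step is recorded as a deviation.
                self.deviations.append(
                    f"Step {number}: expected {step.expected!r}, got {actual!r}"
                )
            outcomes.append(passed)
        return outcomes


protocol = ScriptedProtocol(
    title="OQ-001 Login",
    steps=[
        TestStep("Log in with valid credentials", "Home page displayed",
                 lambda: "Home page displayed"),
        TestStep("Log in with a wrong password", "Error message displayed",
                 lambda: "Account locked"),
    ],
)
try:
    protocol.execute()
except PermissionError as err:
    print(err)                    # 'OQ-001 Login' has not been approved
protocol.approve("QA reviewer")
print(protocol.execute())         # [True, False]
print(protocol.deviations)
```

In a real validation system the approval would be an electronic signature with an audit trail, and deviations would be routed through the formal procedure rather than appended to a list.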

The FDA highlights three less formal test methodologies in its CSA initiative:

- Ad hoc testing is designed to find errors and essentially break the system. Anyone can perform this testing: training is not a prerequisite, and no protocol needs to be preapproved. The challenge is capturing objective evidence in case questions arise later during an audit or inspection.

- Exploratory testing is often described as simultaneous learning, test design, and execution. Its focus is discovery: it relies on the tester to uncover defects and think beyond the limits of scripted tests. The tester designs a test, executes it immediately, observes the results, and uses them to design the next test.

- Unscripted testing allows the tester to select any method to test the software; ad hoc and exploratory testing are both forms of it. Because it requires no prepared test scripts or preapproval and only minimal documentation, it is far less time-consuming than traditional scripted testing.
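The design, execute, observe loop of exploratory testing can be illustrated with a small Python sketch. Here a tester probes a hypothetical `system_accepts` check whose true upper limit is unknown to them, and each observed result decides the next probe. A bisection search is used purely for illustration, and the function, its hidden limit of 500, and the log format are all assumptions. Note that every probe and observation is logged, since unscripted testing still needs objective evidence.

```python
from typing import Callable, List, Tuple


def system_accepts(value: int) -> bool:
    # Hypothetical system under test: silently rejects values above 500.
    return 0 <= value <= 500


def explore_upper_limit(
    accepts: Callable[[int], bool], low: int, high: int
) -> Tuple[int, List[str]]:
    """Find the largest accepted value, assuming accepts(low) is True,
    accepts(high) is False, and acceptance switches only once in between."""
    log: List[str] = []
    while high - low > 1:
        probe = (low + high) // 2                         # design the next test
        result = accepts(probe)                           # execute it immediately
        log.append(f"probe={probe} accepted={result}")    # observe and record evidence
        if result:                                        # use the result to design the next test
            low = probe
        else:
            high = probe
    return low, log


limit, evidence = explore_upper_limit(system_accepts, low=0, high=10_000)
print(limit)  # 500
for entry in evidence:
    print(entry)
```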

Additional test methodologies include:

- Positive testing checks that the application does what is expected, ensuring that the system accepts the inputs a user supplies in normal use.

- Negative testing evaluates how a system handles invalid or unexpected inputs and circumstances, confirming that it rejects them gracefully instead of crashing.

- Performance testing verifies that the system performs at acceptable levels, for example in response time under expected load.

- Security testing verifies that the system protects its data and functions against unauthorized access and misuse.

- Boundary testing confirms that limits such as the upper limit of quantitation (ULQ) and lower limit of quantitation (LLQ) are enforced, using values at, near, and far beyond each boundary.

- White box testing allows testers to inspect and verify the inner workings of a software system, such as its code, infrastructure, and integrations with external systems.

- Grey box testing examines a software application with partial knowledge of its internal structure to identify defects caused by incorrect code or improper use.

- Black box testing assesses a system from the outside, without the tester knowing how the system internally generates its responses to test actions.
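Positive, negative, and boundary testing can be shown side by side in a short Python sketch. The `classify_result` function and its LLQ of 1.0 and ULQ of 100.0 are hypothetical stand-ins for a system that reports analytical results, and the sketch assumes values exactly at a limit are reportable. The checks only look at inputs and outputs, so they are also an example of black box testing.

```python
import math

LLQ = 1.0    # hypothetical lower limit of quantitation
ULQ = 100.0  # hypothetical upper limit of quantitation


def classify_result(value):
    """Hypothetical system under test: classify a measured concentration.
    Assumes values exactly at LLQ or ULQ are reportable."""
    if isinstance(value, bool) or not isinstance(value, (int, float)) or math.isnan(value):
        raise ValueError(f"invalid result: {value!r}")
    if value < LLQ:
        return "below LLQ"
    if value > ULQ:
        return "above ULQ"
    return "reportable"


def run_checks():
    # Positive testing: a normal input is accepted.
    assert classify_result(50.0) == "reportable"

    # Boundary testing: at, just inside, just outside, and far beyond each limit.
    assert classify_result(LLQ) == "reportable"
    assert classify_result(ULQ) == "reportable"
    assert classify_result(1.01) == "reportable"
    assert classify_result(0.99) == "below LLQ"
    assert classify_result(100.01) == "above ULQ"
    assert classify_result(1e9) == "above ULQ"
    assert classify_result(-1e9) == "below LLQ"

    # Negative testing: invalid inputs are rejected cleanly instead of crashing.
    for bad in ("12.5", None, float("nan"), True):
        try:
            classify_result(bad)
        except ValueError:
            continue
        raise AssertionError(f"accepted invalid input {bad!r}")
    return "all checks passed"


print(run_checks())  # all checks passed
```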

Testing is a fundamental and crucial part of CSV, and CSA won’t change that. The hope, however, is that CSA will streamline testing by encouraging us to rely less on scripted testing and preapproved protocols and more on existing test/vendor records and automated tools in our testing efforts. In other words, CSA is designed to help us test smarter, not harder.[2]

The Root Cause of Testing Issues

The foundation of good software, or software that performs as intended, is good requirements. The same is true with testing. If you want to write good tests, you must start with good requirements. Inferior or incorrect requirements are often the root cause of a testing problem.[3]

Eliciting good requirements is critical because the system’s success depends on it. In 21 CFR Part 11, the FDA requires systems to be validated to ensure they consistently perform as intended. But what is the intended use? Simply put, it’s what the requirements define.

User requirements (called epics or user stories in Agile methodology) define the intended use of the system. Functional requirements elaborate on what the system must do to meet the user requirements, and design requirements elaborate on how the functional requirements will be implemented.

Because requirements are interconnected, a failure at one level ripples through the others: the failure of a user requirement (performance) can affect system operation (functional) down to the core (design). This domino effect can compromise the safety and efficacy of the system.
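This interconnection is what a traceability matrix captures. The Python sketch below models a tiny, hypothetical requirement hierarchy (the IDs `UR-1`, `FR-1`, `DS-1`, and so on are invented) and walks the trace links to find every requirement that a failure could ripple into, following links down to more detailed requirements and up to the requirements they support.

```python
from collections import defaultdict, deque
from typing import Dict, List, Set

# Hypothetical trace links: parent requirement -> requirements that elaborate it.
TRACE: Dict[str, List[str]] = {
    "UR-1": ["FR-1", "FR-2"],   # user requirement (intended use)
    "FR-1": ["DS-1"],           # functional requirement -> design requirement
    "FR-2": ["DS-2", "DS-3"],
    "UR-2": ["FR-3"],
    "FR-3": ["DS-4"],
}


def build_parents(trace: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Invert the trace links so we can walk from a requirement to its parents."""
    parents: Dict[str, List[str]] = defaultdict(list)
    for parent, children in trace.items():
        for child in children:
            parents[child].append(parent)
    return parents


def impacted_by(req: str, trace: Dict[str, List[str]]) -> Set[str]:
    """Requirements reachable from `req` by following links down (children)
    or up (parents), without turning sideways into unrelated siblings."""
    parents = build_parents(trace)
    impacted: Set[str] = set()
    for links in (trace, parents):        # walk down first, then up
        queue = deque([req])
        while queue:
            current = queue.popleft()
            for nxt in links.get(current, []):
                if nxt not in impacted:
                    impacted.add(nxt)
                    queue.append(nxt)
    return impacted


print(sorted(impacted_by("UR-1", TRACE)))  # ['DS-1', 'DS-2', 'DS-3', 'FR-1', 'FR-2']
print(sorted(impacted_by("DS-2", TRACE)))  # ['FR-2', 'UR-1']
```

The same walk also tells you which tests to rerun when a requirement changes, provided each test is traced to the requirements it covers.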

If we do not develop and manage requirements competently, we miss the mark on the system’s intended use, which is exactly what validation is supposed to demonstrate: that the system performs as intended. Therefore, requirements elicitation should be built into the software development lifecycle and guided by the characteristics of a good requirement.

Eight Characteristics of a Good Requirement

Gathering and writing good requirements is a core competency for anyone tasked with writing test steps and test scripts. A good requirement should be:

- Unambiguous

- Testable

- Clear

- Correct

- Understandable

- Feasible

- Independent

- Atomic

These eight characteristics are a great litmus test for predicting system success. Achieve all eight, and you’re off to a good start. But requirements that meet all eight are often hard to find, which likely contributes to the failure of so many systems.
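Some of these characteristics can be screened automatically before a human review. The Python sketch below is a deliberately simple, hypothetical heuristic: it flags vague wording (a threat to being unambiguous and testable), statements joined by "and" or "or" (a threat to being atomic), and missing "shall" statements. The word list and rules are assumptions to adapt, not a standard, and a clean result does not prove a requirement is correct or feasible.

```python
import re
from typing import List

# Assumed word list; tune it to your organization's requirements style guide.
VAGUE_TERMS = {"fast", "quickly", "user-friendly", "easy", "appropriate",
               "adequate", "etc", "as needed", "flexible", "robust"}


def lint_requirement(text: str) -> List[str]:
    """Return heuristic warnings for one requirement statement."""
    warnings: List[str] = []
    lowered = text.lower()
    for term in sorted(VAGUE_TERMS):
        # Whole-word match so that, e.g., "breakfast" does not trigger "fast".
        if re.search(rf"\b{re.escape(term)}\b", lowered):
            warnings.append(f"vague term '{term}' (unambiguous/testable?)")
    if re.search(r"\b(and|or)\b", lowered):
        warnings.append("compound statement (atomic?)")
    if not re.search(r"\bshall\b", lowered):
        warnings.append("no 'shall' statement (testable?)")
    return warnings


reqs = [
    "The system shall lock a user account after 3 failed login attempts.",
    "The system shall be fast and user-friendly.",
]
for req in reqs:
    print(req, "->", lint_requirement(req) or "no warnings")
```

A check like this only catches wording problems; whether a requirement is correct, feasible, and independent still needs SME review.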

Risk-based Testing and the Least Burdensome Approach

Once you are satisfied with the requirements, it’s tempting to dive right into testing. But before we can move forward, we must consider risk and the least burdensome approach. In traditional validation, writing test scripts, conducting dry runs, and sending tests through review and approval cycles are time-consuming tasks. However, ad hoc, exploratory, and unscripted testing methods allow us to test on the fly without explicitly writing out test scripts or sending them through a lengthy preapproval process.

So, how do we capture objective evidence if we test on the fly? One option is to record short video snippets at the test step level and keep them as objective evidence. A video can’t be printed out, but it can be stored and managed as an electronic record, which is where a digital validation lifecycle management system becomes essential.
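One thing a digital system can do with such a recording is tie it to the test step it supports and make later alteration detectable. The Python sketch below shows one way to do that, assuming a hypothetical `EvidenceRecord` structure that stores the file's SHA-256 digest, the tester, and a UTC timestamp so the file can be re-verified later. It does not describe any particular validation product, and on its own it does not satisfy Part 11 controls such as audit trails and electronic signatures.

```python
import hashlib
import tempfile
from dataclasses import dataclass
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):  # stream large video files in chunks
            digest.update(chunk)
    return digest.hexdigest()


@dataclass(frozen=True)
class EvidenceRecord:
    # Hypothetical record linking one captured file to one test step.
    test_id: str
    step: int
    tester: str
    file_name: str
    sha256: str
    captured_at: str  # ISO 8601 timestamp, UTC


def capture_evidence(path: Path, test_id: str, step: int, tester: str) -> EvidenceRecord:
    return EvidenceRecord(test_id, step, tester, path.name, sha256_of(path),
                          datetime.now(timezone.utc).isoformat())


def is_unaltered(path: Path, record: EvidenceRecord) -> bool:
    # Re-hash the stored file and compare with the digest captured at test time.
    return sha256_of(path) == record.sha256


with tempfile.TemporaryDirectory() as tmp:
    clip = Path(tmp) / "step3.mp4"
    clip.write_bytes(b"placeholder video bytes")   # stand-in for a real recording
    record = capture_evidence(clip, "EXPL-042", 3, "jdoe")
    before = is_unaltered(clip, record)
    clip.write_bytes(b"edited video bytes")        # simulate tampering
    after = is_unaltered(clip, record)

print(before, after)  # True False
```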

Testing methodologies such as positive, negative, white box, and black box testing are familiar to computer scientists, software developers, and other technology professionals who work in the life sciences. However, in their minds, formal validation has always been a vague concept that only quality assurance or dedicated validation professionals understand. Because CSA expands the accepted test methodologies to include those that technology professionals already know, we can leverage upstream, less formal testing to lessen the burden of downstream formal validation.

Upstream testing is nimble and helps industry professionals zero in on high-risk systems that require formal validation. For medium-risk systems, validation rigor is at the discretion of a team of subject matter experts (SMEs) who can apply critical thinking and perform less formal testing (white box, grey box, ad hoc, and exploratory) if appropriate.

If a system is identified as low risk, it may not require validation at all. A problem in a low-risk system should generally have little effect on processes or patients. If such a problem does affect a process or a patient, the real issue likely lies in the risk assessment: had risk management been performed correctly, the system would not have been deemed low risk.
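The tiering described above can be written down as a simple lookup so that the chosen rigor is recorded consistently. The Python sketch below encodes only what this article says about each tier; the tier names, the `plan_assurance` function, and the wording of each note are hypothetical, and a real plan would come from your own risk procedure and SME judgment.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Tuple


class Risk(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


@dataclass(frozen=True)
class AssurancePlan:
    rigor: str
    suggested_methods: Tuple[str, ...]
    note: str


# Hypothetical encoding of the risk tiers described in the text.
PLANS: Dict[Risk, AssurancePlan] = {
    Risk.HIGH: AssurancePlan(
        "formal validation", ("scripted testing",),
        "High risk: preapproved, scripted protocols."),
    Risk.MEDIUM: AssurancePlan(
        "SME discretion", ("white box", "grey box", "ad hoc", "exploratory"),
        "Medium risk: SMEs apply critical thinking; less formal testing if appropriate."),
    Risk.LOW: AssurancePlan(
        "may not require validation", (),
        "Low risk: document the rationale; if a problem later affects a process "
        "or patient, revisit the risk assessment."),
}


def plan_assurance(risk: Risk) -> AssurancePlan:
    return PLANS[risk]


for tier in Risk:
    plan = plan_assurance(tier)
    print(f"{tier.value:<6} {plan.rigor:<27} {', '.join(plan.suggested_methods) or '-'}")
```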

Leveraging Regulatory Guidance Matrices for Risk Management

In general, risk management has always been a challenge. Some people default to the highest level, the “sky is falling” approach, where everything is a problem and high risk. At the other end of the spectrum, nothing is critical and there are no high-risk requirements.

Professionals tend to be biased toward low risk because validation consumes significant time and resources. Establishing a good requirements management process, along with reference matrices that define what is genuinely catastrophic, serious, not too serious, low, or of no impact, helps reduce this subjectivity.

The CSA draft guidance and ISPE GAMP 5 Second Edition include matrices that show how and when to use these test methodologies. We can use them to develop our own matrices and incorporate those into our risk management processes, SOPs, and quality management systems. In this way, we leverage standards that help strengthen our processes.
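As an illustration of turning such a matrix into something repeatable, the Python sketch below defines a reference scale from the five impact categories named earlier and assigns each requirement its own test approach. The category-to-approach mapping and the requirement names are hypothetical examples of what an organization might put in its SOP, not a table taken from the CSA draft guidance or GAMP 5. Assessing at the requirement level reflects CSA’s advice to reserve scripted testing for high-risk features.

```python
from enum import IntEnum
from typing import Dict, List, Tuple


class Impact(IntEnum):
    # The five categories from the text, ordered from least to most severe.
    NO_IMPACT = 0
    LOW = 1
    NOT_TOO_SERIOUS = 2
    SERIOUS = 3
    CATASTROPHIC = 4


# Hypothetical reference matrix: impact category -> assurance approach.
REFERENCE_MATRIX: Dict[Impact, str] = {
    Impact.CATASTROPHIC: "scripted testing",
    Impact.SERIOUS: "scripted testing",
    Impact.NOT_TOO_SERIOUS: "unscripted testing (ad hoc or exploratory)",
    Impact.LOW: "vendor records and/or ad hoc testing",
    Impact.NO_IMPACT: "no additional testing; document rationale",
}


def assign_approaches(assessments: Dict[str, Impact]) -> List[Tuple[str, Impact, str]]:
    """Map each requirement's assessed impact to an approach, most severe first."""
    ordered = sorted(assessments.items(), key=lambda item: item[1], reverse=True)
    return [(req, impact, REFERENCE_MATRIX[impact]) for req, impact in ordered]


assessments = {
    "FR-1 dose calculation": Impact.CATASTROPHIC,
    "FR-2 audit trail": Impact.SERIOUS,
    "FR-3 report layout": Impact.LOW,
}
for req, impact, approach in assign_approaches(assessments):
    print(f"{req:<24} {impact.name:<15} {approach}")
```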

What’s exciting about CSA is that it treats automated testing as a viable technology and encourages its use for testing systems. Harnessing new technologies, validating them, and putting them into production is already difficult, and we hurt ourselves by adding a huge validation burden. A McKinsey report[4] states that testing and validation consume approximately 20% to 30% of project development costs, and that shouldn’t be the case. Good practice would hover around 15%, and best practice around 5%. But how do you achieve 5%? Use CSA and more informal testing methodologies for medium- and low-risk systems.
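To put those percentages in perspective, the short Python calculation below applies them to a hypothetical project budget of $2,000,000. The budget is an assumed figure; the percentages are the ones quoted above.

```python
BUDGET = 2_000_000  # hypothetical total project development cost, in dollars

# Share of project cost spent on testing and validation, as quoted in the text.
scenarios = {
    "reported (low end)": 0.20,
    "reported (high end)": 0.30,
    "good practice": 0.15,
    "best practice": 0.05,
}

# round() guards against floating-point noise such as 599999.99999...
costs = {name: round(BUDGET * share) for name, share in scenarios.items()}
for name, cost in costs.items():
    print(f"{name:<20} ${cost:>9,}")

savings = costs["reported (high end)"] - costs["best practice"]
print(f"Moving from 30% to 5% frees ${savings:,}")
```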

By incorporating regulatory guidance matrices for risk management into our processes, and by drawing on the expertise of a broader range of industry professionals familiar with all these tools, we can address any level of risk appropriately.

We can’t look at validation as black and white. It’s grey. We have all of these test methodologies and tools at our disposal. What’s important is to apply critical thinking and determine what’s best based on risk and any other factor that merits consideration (the system’s expected life, changes to the system that may increase or decrease risk over time, integration with other systems, etc.). The tools we use can be any combination or permutation, based on a solid assessment that applies critical thinking and documents the rationale for choosing a particular strategy to validate or qualify the system.

The Case for Digital Software Validation

Covid-19 was a game-changer. Before the outbreak, convincing companies to go paperless was an arduous task. The pandemic changed the rules: staff had to work remotely, and paper, as a potential carrier of the virus, had to be sanitized, which meant higher overhead costs. A paperless system enables teams to work and access electronic records from anywhere. Working on a digital system can be almost 50% more efficient,[5] and automation allows us to leverage advanced technologies, as the CSA guidance encourages.

References

[1] Steve Thompson, “Computer Software Assurance (CSA): What’s All the Hype?” ValGenesis blog. https://www.valgenesis.com/blog/computer-software-assurance-csa-whats-all-the-hype-part-1

[2] Lisa Weeks, “Worried About Computer Software Assurance? Relax, You’re Probably Already Doing It.” ValGenesis blog. https://www.valgenesis.com/blog/worried-about-computer-software-assurance-relax-youre-probably-already-doing-it

[3] Steve Thompson, “How Computer Software Assurance Will Impact Traditional CSV Testing.” ValGenesis blog. https://www.valgenesis.com/blog/how-computer-software-assurance-csa-will-impact-traditional-software-testing

[4] McKinsey & Company, “Testing and validation: From hardware focus to full virtualization?” November 23, 2017. https://www.mckinsey.com/capabilities/operations/our-insights/testing-and-validation-from-hardware-focus-to-full-virtualization

[5] ValGenesis, “How to Calculate the ROI of Digitized Validation” (eBook). https://www.valgenesis.com/resource/how-to-calculate-the-roi-of-digitized-validation